Free speech, as recognized in the Universal Declaration of Human Rights, is not an absolute right; international human rights law permits reasonable restrictions aimed at protecting public order, morals, and health. This principle is now at the heart of a complex debate surrounding Pavel Durov, the founder and CEO of Telegram, following his arrest by French authorities.

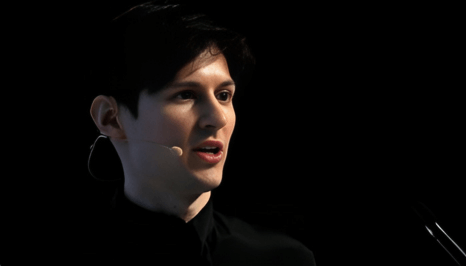

Durov has built Telegram’s reputation on an unyielding commitment to free speech. The platform has become a sanctuary for dissidents and critics of governments, and it has often clashed with national authorities over its lax content policies. However, Durov’s commitment to absolute free speech is now facing serious scrutiny in light of recent allegations that Telegram has been used for criminal activity.

French authorities detained Durov in connection with investigations into the alleged misuse of Telegram for extremism, drug trafficking, scams, and child pornography. This raises a crucial question: does Durov’s staunch defense of privacy and free expression justify a hands-off approach that could endanger public safety?

Durov has famously argued that “privacy… is more important than our fear of bad things happening” and that “to be truly free, you should be ready to risk everything for freedom.” Yet this philosophy invites debate: can a platform prioritize privacy and free speech to the point of ignoring potentially harmful consequences?

Telegram is not just a messaging service but also a social network with significant reach, thanks to its large groups and public channels. Although Telegram encrypts messages, it does not offer end-to-end encryption by default the way apps such as Signal do: only optional “Secret Chats” are end-to-end encrypted, while regular chats, groups, and channels are not. This means Telegram can access much of the content on its platform, which undercuts any claim that it is technically unable to act on requests from law enforcement agencies.

In response to Durov’s arrest, Telegram defended its content moderation practices as “within industry standards” and rejected the idea that a platform or its owner should be held responsible for abuse of that platform. However, if investigations reveal that Telegram knowingly ignored requests to address hate speech, misinformation, or criminal content, Durov and his platform could face severe consequences.

The situation echoes problems seen on other platforms, such as WhatsApp in India, where misinformation spread unchecked and was linked to mob violence before the company limited message forwarding and the government introduced stricter regulations. For Telegram to remain a defender of free speech while keeping its users safe, it must adopt a more balanced approach to content moderation. Absolute free speech should not come at the expense of public safety, and striking this balance is crucial to the platform’s credibility and to the responsibilities it bears in the digital age.